The customer's application ran on Amazon EC2 instances running RHEL. The customer had to meet strict compliance standards and needed autoscaling to be fast and responsive. Because their environment spans both AWS and AWS GovCloud, the solution also had to be fully AWS-native.

As a trusted cloud consulting company, CloudifyOps delivered a robust solution using the Golden Amazon Machine Image (AMI) approach. Through our cloud management services, we implemented an automated pipeline using AWS CodePipeline to create CIS-hardened AMIs. These AMIs were pre-configured with the necessary application dependencies and code, enabling seamless and faster autoscaling by launching instances from a fully baked and secure image.

A Golden AMI is a pre-configured, fully tested machine image that includes the operating system, security configurations, required software packages, and customizations specific to organizational needs. This not only ensures compliance and security but also drives consistency and speed across deployments.

In this blog, we walk through how our cloud consulting company leveraged AWS-native tools and provided end-to-end cloud management services to help the customer improve compliance, reduce instance launch time, and enhance the scalability of their infrastructure.

Let’s look at the challenges faced and how we overcame them.

Because the customer wanted an AWS-native solution, the obvious choice was AWS's own DevOps services.

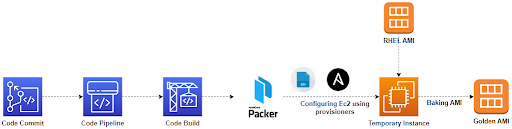

The diagram above shows the pipeline flow and the components used. We used AWS CodeCommit to host the code, AWS CodePipeline as the CI platform and AWS CodeBuild as the build tool. The first stage is the source stage: the pipeline is triggered when a pull request is merged into the master branch of the CodeCommit repository. Next comes the build stage, which bakes the CIS-hardened AMI with the application code deployed. In this stage, CodeBuild invokes Packer, which provisions a temporary instance and runs the shell scripts and Ansible playbooks on it.

CodeBuild is our build platform, and we bake the AMI with a combination of Packer and Ansible. The buildspec.yaml validates the Packer template, starts the Packer build and, once the build completes, sends the stakeholders a notification with the AMI details, most importantly the AMI ID. Below is the buildspec.yaml.

```yaml
version: 0.2

phases:
  pre_build:
    commands:
      - echo "Installing Packer"
      - curl -o packer.zip https://releases.hashicorp.com/packer/1.3.3/packer_1.3.3_linux_amd64.zip && unzip packer.zip
      - echo "Validating ${BUILD_TYPE} Packer template"
      - ./packer validate packer_${BUILD_TYPE}.json
  build:
    commands:
      - ./packer build -color=false packer_${BUILD_TYPE}.json | tee build.log
  post_build:
    commands:
      # Packer's summary line looks like "us-east-1: ami-..."; [[:space:]] avoids a literal ": " that YAML would parse as a mapping
      - grep -E "${AWS_REGION}:[[:space:]]ami-" build.log | cut -d' ' -f2 > ami_id.txt
      # Piping through tee hides Packer's exit code, so fail explicitly if no AMI ID was captured
      - test -s ami_id.txt || exit 1
      - sed -i.bak "s/<>/$(cat ami_id.txt)/g" ami_builder_event.json
      - aws events put-events --entries file://ami_builder_event.json --region $AWS_REGION
      - echo "build completed on `date`"
      - aws s3 cp packages.csv s3://$BUILD_OUTPUT_BUCKET/$BUILD_TYPE/

artifacts:
  files:
    - ami_builder_event.json
    - build.log
  discard-paths: yes
```

In the pre_build phase, we install Packer and validate the template. In the build phase, we run Packer to bake the AMI and save its output to build.log. The post_build phase extracts the AMI ID from the log, fails the build if no AMI was produced, writes the AMI ID into the event body, publishes it with `aws events put-events` to notify the stakeholders, and uploads packages.csv to the build output bucket. Finally, the build log and the event body are saved as artifacts.
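The same post_build logic can be sketched in Python, which makes it easier to unit test than a shell one-liner. This is a minimal sketch, assuming Packer's plain-text output (with `-color=false` and without `-machine-readable`) ends with a summary line such as `us-east-1: ami-...`. The event `Source` and `DetailType` values and the `post_build.py` script name are hypothetical placeholders, not the contents of the customer's ami_builder_event.json.

```python
import json
import re
import sys
from pathlib import Path
from typing import Dict, List, Optional


def extract_ami_id(log_text: str, region: str) -> Optional[str]:
    """Find the AMI ID in Packer's final summary line, e.g. "us-east-1: ami-0abc...".

    Same idea as the grep | cut pipeline in post_build; returns None when the
    build produced no AMI (for example because it failed).
    """
    pattern = re.compile(rf"^{re.escape(region)}: (ami-[0-9a-f]+)\s*$", re.MULTILINE)
    match = pattern.search(log_text)
    return match.group(1) if match else None


def build_event_entries(ami_id: str, build_type: str) -> List[Dict[str, str]]:
    """Entries in the list shape accepted by `aws events put-events --entries file://...`."""
    detail = {"AmiId": ami_id, "BuildType": build_type}
    return [
        {
            "Source": "custom.golden-ami-pipeline",  # hypothetical event source
            "DetailType": "Golden AMI Built",        # hypothetical detail type
            "Detail": json.dumps(detail),            # Detail must be a JSON string
        }
    ]


def main(log_path: str, region: str, build_type: str) -> int:
    ami_id = extract_ami_id(Path(log_path).read_text(), region)
    if ami_id is None:
        # Equivalent of `test -s ami_id.txt || exit 1`
        print("No AMI ID found in build log; failing the build", file=sys.stderr)
        return 1
    entries = build_event_entries(ami_id, build_type)
    Path("ami_builder_event.json").write_text(json.dumps(entries, indent=2))
    print(ami_id)
    return 0


if __name__ == "__main__" and len(sys.argv) == 4:
    # Usage (hypothetical): python post_build.py build.log "$AWS_REGION" "$BUILD_TYPE"
    sys.exit(main(*sys.argv[1:]))
```

Generating the event list directly, instead of running sed on a template, avoids a placeholder that could silently fail to match.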

Packer does the heavy lifting. When CodeBuild invokes it, Packer provisions a temporary instance, and its Ansible and shell provisioners harden the instance and deploy the required packages and application code. Once provisioning is done, Packer bakes the golden AMI, registers it in the AWS account and then terminates the temporary instance. Below is a sample Packer template (the buildspec loads it as packer_${BUILD_TYPE}.json) that drives the entire AMI bake.

```json
{
  "variables": {
    "vpc": "{{env `BUILD_VPC_ID`}}",
    "subnet": "{{env `BUILD_SUBNET_ID`}}",
    "aws_region": "{{env `AWS_REGION`}}",
    "ami_name": "Base-{{isotime \"02-Jan-06 03_04_05\"}}"
  },
  "builders": [
    {
      "name": "AWS AMI Builder",
      "type": "amazon-ebs",
      "region": "{{user `aws_region`}}",
      "source_ami_filter": {
        "filters": {
          "virtualization-type": "hvm",
          "name": "RHEL-7.9*-x86_64-*",
          "root-device-type": "ebs"
        },
        "owners": ["******"],
        "most_recent": true
      },
      "instance_type": "t2.small",
      "ssh_username": "ec2-user",
      "ami_name": "{{user `ami_name` | clean_ami_name}}",
      "tags": {
        "Name": "{{user `ami_name`}}"
      },
      "run_tags": {
        "Name": "{{user `ami_name`}}"
      },
      "run_volume_tags": {
        "Name": "{{user `ami_name`}}"
      },
      "snapshot_tags": {
        "Name": "{{user `ami_name`}}"
      },
      "ami_description": "RHEL 7 with Cloudwatch and Wazuh agent",
      "associate_public_ip_address": true,
      "vpc_id": "{{user `vpc`}}",
      "subnet_id": "{{user `subnet`}}"
    }
  ],
  "provisioners": [
    {
      "type": "file",
      "source": "tools/rhel7_ami_preparation.sh",
      "destination": "/home/ec2-user/"
    },
    {
      "type": "ansible-local",
      "staging_directory": "/home/ec2-user/ansible-staging/",
      "playbook_file": "ansible/playbook-base.yaml",
      "playbook_dir": "ansible",
      "galaxy_file": "ansible/requirements_base.yaml",
      "clean_staging_directory": true
    },
    {
      "type": "shell",
      "remote_folder": "/home/ec2-user/",
      "inline": [
        "rm -f /home/ec2-user/rhel7_ami_preparation.sh"
      ]
    }
  ]
}
```
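The `ami_name` variable combines Packer's `isotime` function, which uses Go's reference-time layout, with the `clean_ami_name` filter. The Python sketch below shows what those two produce. It assumes Packer formats `isotime` in UTC and that `clean_ami_name` replaces every character EC2 disallows in AMI names with `-`; the function names are hypothetical.

```python
import re
from datetime import datetime, timezone
from typing import Optional

# Characters EC2 allows in an AMI name: letters, digits, ( ) [ ] space . / - ' @ _
# Anything else is replaced with "-", approximating Packer's clean_ami_name.
_INVALID_AMI_CHARS = re.compile(r"[^A-Za-z0-9()\[\] ./\-'@_]")


def base_ami_name(now: Optional[datetime] = None) -> str:
    """Python equivalent of "Base-{{isotime \"02-Jan-06 03_04_05\"}}".

    In Go's layout, 02 is the day, Jan the abbreviated month, 06 the two-digit
    year, 03 the hour on a 12-hour clock, 04 the minute and 05 the second.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    return "Base-" + now.strftime("%d-%b-%y %I_%M_%S")


def clean_ami_name(name: str) -> str:
    return _INVALID_AMI_CHARS.sub("-", name)


if __name__ == "__main__":
    name = base_ami_name()
    print(name, "->", clean_ami_name(name))
```

The generated name contains only allowed characters, so `clean_ami_name` leaves it unchanged here. Note that `03` is a 12-hour hour with no AM/PM marker, so the name does not distinguish morning from afternoon builds; Go's `15` layout token gives a 24-hour hour if that matters.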

When we began working on the solution, the main challenge was designing the AMI pipelines: we had to identify the packages common to all six AMIs so that they could go into the base golden AMI. The customer's infrastructure included four application components and two security tools, for a total of six AMIs.

Every AMI started from a CIS-hardened base, on top of which we deployed the application and security tools. We therefore decided to create a CIS-hardened base golden AMI containing the common components, such as the CloudWatch and Wazuh agents, and to use it as the source AMI for all the other AMIs.
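Deciding what goes into the base golden AMI is essentially a set intersection across the package lists of the six AMIs. A minimal Python sketch, in which the AMI names and package lists are hypothetical (the post does not list the actual packages):

```python
from typing import Dict, Set, Tuple

# Hypothetical package lists: four application components and two security tools.
EXAMPLE_AMIS: Dict[str, Set[str]] = {
    "app-1": {"amazon-cloudwatch-agent", "wazuh-agent", "java-11-openjdk"},
    "app-2": {"amazon-cloudwatch-agent", "wazuh-agent", "java-11-openjdk", "nginx"},
    "app-3": {"amazon-cloudwatch-agent", "wazuh-agent", "python3"},
    "app-4": {"amazon-cloudwatch-agent", "wazuh-agent", "nodejs"},
    "security-tool-1": {"amazon-cloudwatch-agent", "wazuh-agent", "openscap-scanner"},
    "security-tool-2": {"amazon-cloudwatch-agent", "wazuh-agent", "clamav"},
}


def split_base_layer(
    ami_packages: Dict[str, Set[str]],
) -> Tuple[Set[str], Dict[str, Set[str]]]:
    """Return (packages for the base golden AMI, packages each AMI adds on top)."""
    if not ami_packages:
        return set(), {}
    # A package belongs in the base layer only if every AMI needs it.
    common = set.intersection(*ami_packages.values())
    remainder = {name: pkgs - common for name, pkgs in ami_packages.items()}
    return common, remainder


if __name__ == "__main__":
    base, layers = split_base_layer(EXAMPLE_AMIS)
    print("base golden AMI:", sorted(base))
    for name, extra in sorted(layers.items()):
        print(f"{name}: {sorted(extra)}")
```

A strict intersection keeps the base AMI minimal; packages shared by only some AMIs stay in the derived AMIs' own provisioning.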

With so many moving parts, we wanted to prevent the infrastructure drift that could creep in while replicating the pipelines across all components and across both the AWS and AWS GovCloud accounts. We therefore automated the provisioning of the entire setup, including CodeCommit, CodeBuild, CodePipeline, the SNS topic and the required IAM roles, with AWS CloudFormation (CFN) templates that provisioned and integrated all the components. This small step in the initial phase of the project gave the implementation a big push. Below is the part of the template that defines the CodeBuild project for the base AMI.

```yaml
CodeBuildProjectBase:
  Type: AWS::CodeBuild::Project
  Properties:
    Name: !Sub '${ServiceNameBase}_build'
    Artifacts:
      Type: CODEPIPELINE
    Environment:
      Type: LINUX_CONTAINER
      ComputeType: BUILD_GENERAL1_SMALL
      Image: !Sub 'aws/codebuild/${CodeBuildEnvironment}'
      EnvironmentVariables:
        - Name: BUILD_OUTPUT_BUCKET
          Value: !Ref BuildArtifactsBucket
        - Name: BUILD_VPC_ID
          Value: !Ref BuilderVPC
        - Name: BUILD_IAM_ROLE
          Value: !Ref BuilderIAMRole
        - Name: BUILD_SUBNET_ID
          Value: !Ref BuilderPublicSubnet
        - Name: BUILD_TYPE
          Value: base
    ServiceRole: !GetAtt CodeBuildServiceRole.Arn
    Source:
      Type: CODEPIPELINE
```
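One way to keep replicated projects from drifting is to generate every CodeBuild project from a single definition instead of copying YAML by hand. The Python sketch below emits CloudFormation JSON shaped like the CodeBuildProjectBase resource above. The build-type names, the per-type `Name` pattern and the logical-ID scheme are hypothetical, and the output is only a `Resources` fragment that still needs the parameters and other resources (bucket, VPC, IAM roles) from the full template.

```python
import json
from typing import Any, Dict, List

# Hypothetical build types: "base" is the only one shown in the post; the others
# stand in for the four application components and two security tools.
BUILD_TYPES: List[str] = ["base", "app1", "app2", "app3", "app4", "sec1", "sec2"]


def codebuild_project(build_type: str) -> Dict[str, Any]:
    """One AWS::CodeBuild::Project resource, shaped like CodeBuildProjectBase."""
    env = [
        {"Name": "BUILD_OUTPUT_BUCKET", "Value": {"Ref": "BuildArtifactsBucket"}},
        {"Name": "BUILD_VPC_ID", "Value": {"Ref": "BuilderVPC"}},
        {"Name": "BUILD_IAM_ROLE", "Value": {"Ref": "BuilderIAMRole"}},
        {"Name": "BUILD_SUBNET_ID", "Value": {"Ref": "BuilderPublicSubnet"}},
        # The only per-project difference: which packer_<type>.json gets built
        {"Name": "BUILD_TYPE", "Value": build_type},
    ]
    return {
        "Type": "AWS::CodeBuild::Project",
        "Properties": {
            # Hypothetical naming pattern; ${ServiceNameBase} is resolved by Fn::Sub
            "Name": {"Fn::Sub": "${ServiceNameBase}_" + build_type + "_build"},
            "Artifacts": {"Type": "CODEPIPELINE"},
            "Environment": {
                "Type": "LINUX_CONTAINER",
                "ComputeType": "BUILD_GENERAL1_SMALL",
                "Image": {"Fn::Sub": "aws/codebuild/${CodeBuildEnvironment}"},
                "EnvironmentVariables": env,
            },
            "ServiceRole": {"Fn::GetAtt": ["CodeBuildServiceRole", "Arn"]},
            "Source": {"Type": "CODEPIPELINE"},
        },
    }


def logical_id(build_type: str) -> str:
    # "base" -> "CodeBuildProjectBase", matching the resource name above
    return "CodeBuildProject" + build_type.capitalize()


def render_resources(build_types: List[str]) -> str:
    resources = {logical_id(t): codebuild_project(t) for t in build_types}
    return json.dumps({"Resources": resources}, indent=2)


if __name__ == "__main__":
    print(render_resources(BUILD_TYPES))
```

Deploying the same generated template to both the AWS and AWS GovCloud accounts keeps every component's project identical apart from its BUILD_TYPE.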

In the Cloud and DevOps world, without automation we’d be trapped in a tech comedy of errors.

This implementation followed the AWS-native approach. For another customer, we built the Golden AMI pipeline with open-source tools; we will discuss that implementation in the second part of this blog.

To learn more about how the CloudifyOps team can help you with security compliance solutions, write to us today at sales@cloudifyops.com.


Copyright 2024 CloudifyOps. All Rights Reserved