Our customer, a healthcare company, ran microservices-based applications on Amazon EKS, with multiple non-production environments for regression testing, sandboxing, and customer demos. Ensuring high availability while keeping costs down was their primary concern.

To address this, CloudifyOps, a leading cloud consulting company, designed and implemented a robust, secure cloud architecture using Horizontal Pod Autoscaling (HPA), Karpenter, and AWS Node Termination Handler (NTH) on AWS Spot Instances. This approach optimized resource utilization, enhanced security, and reduced infrastructure costs by approximately 40%.

In this blog, we will walk you through the solution we implemented and how we overcame the challenges, providing valuable insights into cost optimization, scalability, and secure cloud deployment strategies.

The entire non-production cluster ran on On-Demand Instances. Because the number of nodes varied with how heavily the environments were used, the customer could reserve only a baseline number of instances and paid On-Demand rates for everything above that baseline. To address this, we introduced Spot Instances.

That raised the problem of scaling effectively. Because AWS can reclaim Spot Instances at short notice, we had to plan for losing Spot nodes.

Since Spot nodes are ephemeral, we needed a mechanism to gracefully drain pods from a node that is about to be reclaimed, so that they can be rescheduled onto a replacement node. We used AWS Node Termination Handler (NTH) for this. AWS issues a Spot Instance interruption notice two minutes before the actual interruption, and NTH cordons and drains the node as soon as it sees that notice. We run NTH in IMDS mode, in which it runs as a DaemonSet and polls the EC2 Instance Metadata Service (IMDS) on each node for interruption notices.
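For reference, a minimal sketch of Helm values that run NTH in IMDS mode might look like the following. It assumes NTH is installed from the `aws-node-termination-handler` Helm chart; the file name `values-nth.yaml` and the choice to schedule NTH only on Spot nodes are illustrative assumptions, not a copy of the customer's configuration.

```yaml
# values-nth.yaml (hypothetical file name): Helm values for aws-node-termination-handler.
# IMDS mode is used when SQS queue processing is left disabled.
enableSqsTerminationDraining: false
# Cordon and drain the node when a Spot interruption notice appears in IMDS.
enableSpotInterruptionDraining: true
# Also cordon and drain on EC2 scheduled maintenance events.
enableScheduledEventDraining: true
# Assumption: run the DaemonSet only on Spot nodes, using the capacity-type label
# that Karpenter applies to the nodes it launches.
nodeSelector:
  karpenter.sh/capacity-type: spot
```

Restricting the DaemonSet to Spot nodes keeps it off the On-Demand node group; if you also want NTH to react to scheduled maintenance events on On-Demand nodes, drop the `nodeSelector`.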

We used Karpenter's autoscaling capability to handle this scenario. Karpenter's provisioners define which kinds of nodes it may launch, and they handle node scaling effectively. In our setup, the provisioner launches a Spot node by default, using one of the instance types listed in the provisioner configuration. How often Spot capacity is unavailable varies by Region, Availability Zone, and instance type; when no Spot capacity is available, Karpenter launches an On-Demand node instead.

```yaml
# This provisioner provisions general-purpose (t2, t3, t3a) instances
apiVersion: karpenter.sh/v1alpha5
kind: Provisioner
metadata:
  name: general-purpose
spec:
  requirements:
    # Include general-purpose instance families
    - key: node.kubernetes.io/instance-type
      operator: In
      values:
        - t3.xlarge
        - t3.2xlarge
        - t3a.xlarge
        - t3a.2xlarge
        - t2.xlarge
        - t2.2xlarge
    # Allow both capacity types so Karpenter can fall back from Spot to On-Demand
    - key: karpenter.sh/capacity-type
      operator: In
      values:
        - spot
        - on-demand
    - key: kubernetes.io/os
      operator: In
      values:
        - linux
    # Only two AZs, to limit inter-AZ data transfer costs
    - key: topology.kubernetes.io/zone
      operator: In
      values: [us-east-1a, us-east-1b]
  providerRef:
    name: default
```

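Here, the `karpenter.sh/capacity-type` requirement lists both `spot` and `on-demand`, and that is what does the magic for us: when a provisioner allows both, Karpenter prefers Spot capacity and falls back to On-Demand only when no Spot capacity is available for the allowed instance types and zones.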
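The provisioner's `providerRef` points at an `AWSNodeTemplate` named `default`, which tells Karpenter (in the `v1alpha5` API generation) which subnets and security groups to launch nodes into. A minimal sketch, assuming the subnets and security groups are tagged for Karpenter discovery; the cluster name `my-cluster` is hypothetical.

```yaml
# AWSNodeTemplate referenced by providerRef.name: default in the provisioner.
apiVersion: karpenter.k8s.aws/v1alpha1
kind: AWSNodeTemplate
metadata:
  name: default
spec:
  # Assumption: subnets and security groups carry the tag
  # karpenter.sh/discovery=<cluster name>; "my-cluster" is a placeholder.
  subnetSelector:
    karpenter.sh/discovery: my-cluster
  securityGroupSelector:
    karpenter.sh/discovery: my-cluster
```

Because the general-purpose provisioner already limits zones to `us-east-1a` and `us-east-1b`, Karpenter only uses the discovered subnets in those two zones.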

One of our concerns was handling node taints. A node that Karpenter launches must also carry the required taints, so that pods with matching tolerations are scheduled onto it and other pods stay off. We handled this by adding a taints configuration to the Karpenter provisioner, as shown below.

```yaml
spec:
  taints:
    - key: workload/cpu-accelerated
      value: "true"
      effect: NoSchedule
```

This ensures that every node launched by the provisioner is tainted. Create two provisioners: one general-purpose provisioner for untainted nodes, and a second one dedicated to tainted nodes.
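As an illustration, the second (tainted) provisioner and a workload that tolerates its taint could be sketched as follows. The provisioner name, instance types, node label, Deployment name, and container image are all hypothetical; only the taint itself comes from the snippet above.

```yaml
# Hypothetical second provisioner, dedicated to tainted nodes.
apiVersion: karpenter.sh/v1alpha5
kind: Provisioner
metadata:
  name: cpu-accelerated               # hypothetical name
spec:
  taints:
    - key: workload/cpu-accelerated
      value: "true"
      effect: NoSchedule
  labels:
    workload/cpu-accelerated: "true"  # hypothetical label so pods can target these nodes
  requirements:
    - key: node.kubernetes.io/instance-type
      operator: In
      values: [c5.xlarge, c5.2xlarge] # hypothetical instance types
    - key: karpenter.sh/capacity-type
      operator: In
      values: [spot, on-demand]
  providerRef:
    name: default
---
# Hypothetical workload that tolerates the taint and is pinned to the tainted nodes.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cpu-heavy-service             # hypothetical
spec:
  replicas: 2
  selector:
    matchLabels:
      app: cpu-heavy-service
  template:
    metadata:
      labels:
        app: cpu-heavy-service
    spec:
      # The toleration only *allows* scheduling on tainted nodes;
      # the nodeSelector *requires* it.
      nodeSelector:
        workload/cpu-accelerated: "true"
      tolerations:
        - key: workload/cpu-accelerated
          operator: Equal
          value: "true"
          effect: NoSchedule
      containers:
        - name: app
          image: public.ecr.aws/nginx/nginx:latest  # placeholder image
```

Pairing a toleration with a nodeSelector matters: a toleration alone would still let Karpenter place the pod on an untainted general-purpose node.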

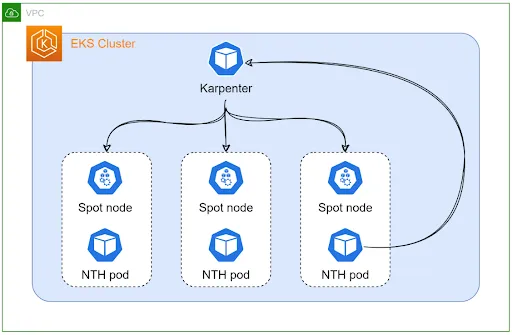

Karpenter continuously watches the cluster for pods that cannot be scheduled. When there are not enough nodes, it launches a Spot node for us. When a Spot interruption occurs, NTH detects it through the instance metadata and drains the node. The evicted pods are recreated and go into a Pending state, which Karpenter notices and responds to by provisioning a replacement node. Hooyah! We have a new node in place. We kept the EKS node group at only two nodes, tainted so that it accommodates only critical workloads, including the cluster add-ons.
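To keep a critical workload on that small, tainted node group rather than on Karpenter-launched Spot nodes, its pod spec needs both a toleration and a node selector. A typical candidate is the Karpenter controller itself, which Karpenter's documentation recommends running on nodes it does not manage. A minimal sketch, assuming the node group carries a hypothetical `dedicated=critical:NoSchedule` taint and is an EKS managed node group named `critical-ng` (also hypothetical):

```yaml
# Pod spec fragment for a critical add-on (hypothetical taint and node group name).
spec:
  nodeSelector:
    # EKS managed node groups label their nodes with the node group name.
    eks.amazonaws.com/nodegroup: critical-ng
  tolerations:
    - key: dedicated          # hypothetical taint key on the node group
      operator: Equal
      value: critical
      effect: NoSchedule
```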

This solution has been running in the customer's environment for the past eight months and counting. It brought the non-production EKS infrastructure cost down by 40%. We also reduced the number of Availability Zones (AZs) from three to two to cut inter-AZ data transfer costs. Running in a single AZ would have saved even more, but we had to preserve high availability at the same time.


Copyright 2024 CloudifyOps. All Rights Reserved