In today’s rapidly evolving cloud-native landscape, observability has become a critical aspect of managing and monitoring applications deployed on Kubernetes. One popular solution gaining traction is Loki, a highly scalable and cost-effective log aggregation system developed by Grafana Labs. Loki offers a powerful approach to efficiently store, search, and analyze logs, allowing developers and operators to gain valuable insights into their applications’ performance and behavior.

In this blog post, we will explore Loki and dive into setting up a distributed Loki architecture on Amazon Elastic Kubernetes Service (EKS), a managed Kubernetes service provided by Amazon Web Services (AWS). We will understand Loki’s architecture and components, and configure Loki in a distributed setup. By leveraging EKS, we can harness the benefits of both Loki and the flexibility of EKS to achieve robust log management and analysis for our containerized applications.

Whether you’re a DevOps engineer, a Kubernetes enthusiast, or simply looking to enhance your log management capabilities on EKS, this blog post will provide you with the knowledge and hands-on guidance needed to get started with Loki’s distributed setup.

Let's start with an overview of the components that make up a distributed Loki deployment.

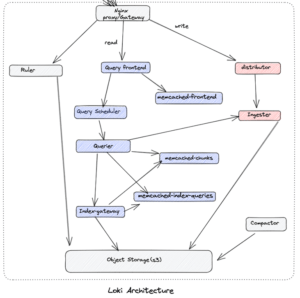

The table below describes these components and their functions.

| Component | Description |
| --- | --- |
| Loki Gateway | A reverse proxy and load balancer that serves as the single entry point to the cluster, routing write requests to the Distributor and read requests to the Query Frontend. |
| Query Frontend | It sits in front of the Queriers, splitting large queries into smaller ones, queueing them, caching results, and aggregating the partial results into a single response. |
| Query Scheduler | It holds the queue of queries handed over by the Query Frontend and dispatches them to available Queriers, so that query load is spread evenly across the Queriers. |
| Querier | It executes queries by fetching recent data from the Ingesters and older data from long-term storage (using the Index Gateway for index lookups), enabling parallel query processing across multiple Queriers. |
| Ingester | It receives log streams from the Distributors, builds them into chunks in memory, and flushes those chunks to long-term storage. |
| Distributor | It receives log streams from log shippers, validates them, and forwards each stream to a set of Ingesters, replicating it for fault tolerance and high availability. |
| Alertmanager | It receives the alerts fired by the Ruler and routes notifications to channels such as email, Slack, or PagerDuty. It is the Prometheus Alertmanager and is deployed separately from the Loki components. |
| Compactor | It periodically merges the many small index files written by the Ingesters into fewer, larger ones and, when retention is enabled, removes expired data, improving storage utilization and query performance. |
| Ruler | It evaluates alerting rules against log data and generates alerts based on predefined conditions, enabling proactive notification of significant events or anomalies. |
| Index Gateway | It serves index lookups on behalf of the Queriers, downloading index files from object storage so that each Querier does not need its own copy of the index. |
| memcached-chunks | It caches log chunks in memory to improve query performance by avoiding repeated reads from long-term storage. |
| memcached-frontend | It caches the results of previously executed queries so that repeated or overlapping queries return faster. |
| memcached-index-queries | It caches the results of index lookups, speeding up subsequent queries that use similar label filters. |
| memcached-index-writes | It caches recently written index entries so that duplicate index writes can be skipped, reducing load on the index store. |

These Loki components work together to create a distributed log aggregation system that provides scalable log storage, efficient querying, fault tolerance, and high availability. Understanding the role of each component is essential before deploying; detailed descriptions of each component can be found in the Loki documentation.

Using Loki in a distributed manner offers several advantages over the traditional Loki stack setup. Here are some key benefits:

Scalability: By deploying Loki in a distributed manner, you can scale your log aggregation system to handle large volumes of logs from multiple sources. Distributed Loki setups allow for horizontal scaling, where you can add more resources and nodes to accommodate increased log ingestion and querying demands. This scalability ensures that your log management solution can grow with your application’s needs.

Fault Tolerance: In a distributed Loki setup, each incoming log stream is replicated to multiple Ingesters before it is flushed to long-term storage. If a single node or component fails, the remaining replicas keep serving reads and writes, so the system can continue to operate and recent log data remains accessible. This redundancy greatly reduces the risk of data loss during a failure.
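To make the replication idea concrete, here is a minimal sketch of how a write path might pick several Ingesters for one log stream using a hash ring. It is an illustration only, not Loki's actual implementation: the `Ring` class, the ingester names, the token count, and the replication factor of 3 are all assumptions chosen for the example.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Map a string to a position on the ring (illustrative hash choice)."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class Ring:
    """Toy consistent-hash ring; a real ring also tracks ingester health and state."""

    def __init__(self, ingesters, tokens_per_ingester=16):
        # Each ingester owns several tokens so that load spreads more evenly.
        self._tokens = sorted(
            (_hash(f"{name}-{i}"), name)
            for name in ingesters
            for i in range(tokens_per_ingester)
        )
        self._positions = [pos for pos, _ in self._tokens]
        self._count = len(set(ingesters))

    def replicas(self, stream_labels: str, replication_factor: int = 3):
        """Walk clockwise from the stream's hash and collect distinct ingesters."""
        wanted = min(replication_factor, self._count)
        start = bisect.bisect(self._positions, _hash(stream_labels))
        chosen = []
        for offset in range(len(self._tokens)):
            _, name = self._tokens[(start + offset) % len(self._tokens)]
            if name not in chosen:
                chosen.append(name)
            if len(chosen) == wanted:
                break
        return chosen


def quorum(replication_factor: int) -> int:
    """Number of successful writes needed for a simple majority."""
    return replication_factor // 2 + 1


ring = Ring(["ingester-0", "ingester-1", "ingester-2", "ingester-3"])
targets = ring.replicas('{app="checkout", env="prod"}')
print(targets, "quorum:", quorum(3))
```

With a replication factor of 3 and a majority quorum of 2, one of the three chosen Ingesters can be unavailable and the write can still be acknowledged, which is the property the paragraph above describes.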

Load Balancing: With a distributed Loki setup, you can distribute the load of log ingestion and querying across multiple nodes. This load balancing mechanism improves overall system performance by effectively utilizing available resources and preventing bottlenecks. Load balancing ensures that the system can handle high traffic and large query volumes without compromising performance or responsiveness.

Efficient Resource Utilization: In a distributed setup, Loki components can be deployed on separate nodes or clusters, allowing for optimized resource utilization. Each component can be scaled independently based on its specific resource requirements. This flexibility ensures efficient resource allocation and prevents resource contention, maximizing the overall efficiency of your log management infrastructure.

Improved Query Performance: Distributing the querying workload across multiple Queriers in a distributed Loki setup enables parallel processing of queries. This parallelization enhances query performance and reduces query response times, even when dealing with large volumes of log data. The distributed setup ensures that queries are efficiently distributed and processed, resulting in faster and more responsive log analysis.
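The parallelism described above depends on breaking one large query into smaller pieces. The sketch below shows the idea by splitting a time range into fixed intervals and running a stand-in function on each piece concurrently. The 30-minute interval, the `run_subquery` stub, and the thread pool are assumptions made for illustration; they are not Loki's internals.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import datetime, timedelta


def split_range(start: datetime, end: datetime, interval: timedelta):
    """Yield (sub_start, sub_end) pairs that cover [start, end) without gaps."""
    cursor = start
    while cursor < end:
        nxt = min(cursor + interval, end)
        yield cursor, nxt
        cursor = nxt


def run_subquery(window):
    """Stand-in for a querier: a real one would fetch and filter log chunks."""
    sub_start, sub_end = window
    return f"{sub_start:%H:%M}-{sub_end:%H:%M}"


start = datetime(2024, 1, 1, 0, 0)
end = datetime(2024, 1, 1, 2, 15)
windows = list(split_range(start, end, timedelta(minutes=30)))

# Each window is handled independently; pool.map returns results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(run_subquery, windows))

print(results)
```

Because each window is independent, adding more workers (or, in a real cluster, more Queriers) shortens the total time for a long-range query, up to the point where storage or network becomes the bottleneck.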

Enhanced Availability and Redundancy: A distributed Loki setup provides built-in redundancy by replicating log data across multiple storage nodes. This redundancy ensures that log data is highly available and accessible, even in the face of node failures or network issues. By spreading the data across multiple nodes, the distributed setup provides increased resilience and minimizes the risk of data loss.

Overall, using Loki in a distributed manner offers significant benefits in terms of scalability, fault tolerance, load balancing, query performance, and resource utilization. It allows your log management system to handle increasing log volumes, ensures high availability, and provides a more efficient and robust solution for log aggregation and analysis.

Now let's deploy Loki in distributed mode with a minimal set of components and look at how each one works. Here, we use an S3 bucket to store the Loki logs.

Prerequisites:

Deployment Steps:

Before deploying Loki, we need to configure a service account, an IAM role, and a bucket policy so that the Loki pods can access the S3 bucket and push logs to it.

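Configure the IAM role and policy. The role's trust policy below allows the `loki` service account in the `loki` namespace to assume the role through the cluster's OIDC provider: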

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "",
          "Effect": "Allow",
          "Principal": {
            "Federated": "arn:aws:iam::12345678910:oidc-provider/oidc.eks.eu-west-1.amazonaws.com/id/1847B92748AB2A2XYZ"
          },
          "Action": "sts:AssumeRoleWithWebIdentity",
          "Condition": {
            "StringEquals": {
              "oidc.eks.eu-west-1.amazonaws.com/id/1847B92748AB2A2XYZ:sub": "system:serviceaccount:loki:loki"
            }
          }
        }
      ]
    }
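Since the trust policy repeats the OIDC provider and hard-codes the namespace and service account name, it can help to generate it from parameters rather than editing the JSON by hand. The following is a small sketch of that idea; the function name `build_trust_policy` and its parameters are hypothetical, and the values passed in are the placeholders used in this post.

```python
import json


def build_trust_policy(account_id, oidc_provider, namespace, service_account):
    """Return a trust policy that lets one Kubernetes service account assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_provider}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # The condition key is the OIDC provider followed by ":sub".
                        f"{oidc_provider}:sub": (
                            f"system:serviceaccount:{namespace}:{service_account}"
                        )
                    }
                },
            }
        ],
    }


policy = build_trust_policy(
    account_id="12345678910",  # placeholder taken from this post
    oidc_provider="oidc.eks.eu-west-1.amazonaws.com/id/1847B92748AB2A2XYZ",
    namespace="loki",
    service_account="loki",
)
print(json.dumps(policy, indent=2))
```

The printed JSON can be saved to a file and supplied as the role's trust policy with whichever tool you use to manage IAM.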

Then create the service account and annotate it with the ARN of the role:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: loki
      namespace: loki
      annotations:
        eks.amazonaws.com/role-arn: "arn:aws:iam::12345678910:role/loki-distributed-bucket-role"

Update the policy of the bucket in which we store the Loki logs:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::12345678910:role/loki-distributed-bucket-role"
          },
          "Action": "s3:ListBucket",
          "Resource": "arn:aws:s3:::dframe-loki-distributed"
        },
        {
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::12345678910:role/loki-distributed-bucket-role"
          },
          "Action": [
            "s3:GetObject",
            "s3:PutObject",
            "s3:DeleteObject"
          ],
          "Resource": "arn:aws:s3:::dframe-loki-distributed/*"
        }
      ]
    }
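The bucket policy uses two statements because `s3:ListBucket` applies to the bucket itself, while the object actions apply to the keys inside it (the `/*` resource). A quick way to catch a mix-up is a small check like the one below. It is a simplified sketch: the helper `check_statement_scopes` is hypothetical, and it treats only `s3:ListBucket` as a bucket-level action, which is enough for this policy but not a complete model of S3 permissions.

```python
import json

# The bucket policy from this post, with the same placeholder account ID.
BUCKET_POLICY = json.loads("""
{
  "Version": "2012-10-17",
  "Statement": [
    {"Effect": "Allow",
     "Principal": {"AWS": "arn:aws:iam::12345678910:role/loki-distributed-bucket-role"},
     "Action": "s3:ListBucket",
     "Resource": "arn:aws:s3:::dframe-loki-distributed"},
    {"Effect": "Allow",
     "Principal": {"AWS": "arn:aws:iam::12345678910:role/loki-distributed-bucket-role"},
     "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
     "Resource": "arn:aws:s3:::dframe-loki-distributed/*"}
  ]
}
""")

BUCKET_LEVEL_ACTIONS = {"s3:ListBucket"}  # simplification, see the note above


def _as_list(value):
    """IAM allows a single string or a list for Action and Resource."""
    return [value] if isinstance(value, str) else list(value)


def check_statement_scopes(policy):
    """Return messages for actions attached to the wrong kind of resource ARN."""
    problems = []
    for stmt in policy["Statement"]:
        for action in _as_list(stmt["Action"]):
            for resource in _as_list(stmt["Resource"]):
                is_object_arn = resource.endswith("/*")
                if action in BUCKET_LEVEL_ACTIONS and is_object_arn:
                    problems.append(f"{action} should target the bucket, not {resource}")
                if action not in BUCKET_LEVEL_ACTIONS and not is_object_arn:
                    problems.append(f"{action} should target objects, not {resource}")
    return problems


print(check_statement_scopes(BUCKET_POLICY) or "scopes look consistent")
```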

Deploy Loki through Helm. In the custom values file, set the service account annotation and the storage configuration:

    serviceAccount:
      # -- Specifies whether a ServiceAccount should be created
      create: true
      # -- The name of the ServiceAccount to use.
      # If not set and create is true, a name is generated using the fullname template
      name: loki
      # -- Image pull secrets for the service account
      imagePullSecrets: []
      # -- Annotations for the service account
      annotations:
        eks.amazonaws.com/role-arn: arn:aws:iam::121234567890:role/loki-distributed-bucket-role
      # -- Set this toggle to false to opt out of automounting API credentials for the service account
      automountServiceAccountToken: true

    # In the loki-distributed chart values, this block sits under the top-level `loki:` key.
    storageConfig:
      boltdb_shipper:
        shared_store: aws
      aws:
        s3: s3://us-east-1
        bucketnames: dframe-loki-distributed
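The role ARN appears in three places in this setup: the ServiceAccount annotation, the bucket policy principal, and the Helm values. In a real deployment all three must be the same well-formed ARN, and the placeholder account IDs used in the snippets in this post are not identical, so they should not be copied as they are. The sketch below shows one way to check this before deploying. The helper `check_role_arns` is hypothetical, and the pattern only covers the standard `aws` partition.

```python
import re

# IAM role ARN with a 12-digit account ID (standard "aws" partition only).
ROLE_ARN = re.compile(r"^arn:aws:iam::(\d{12}):role/([\w+=,.@/-]+)$")


def check_role_arns(arns):
    """Take a mapping of location -> ARN and return human-readable problems."""
    problems = []
    for where, arn in arns.items():
        if not ROLE_ARN.match(arn):
            problems.append(f"{where}: not a well-formed role ARN (12-digit account ID expected)")
    if len(set(arns.values())) > 1:
        problems.append("role ARNs differ between locations")
    return problems


# Placeholder values exactly as they appear in this post.
arns = {
    "ServiceAccount annotation": "arn:aws:iam::12345678910:role/loki-distributed-bucket-role",
    "bucket policy principal": "arn:aws:iam::12345678910:role/loki-distributed-bucket-role",
    "Helm values annotation": "arn:aws:iam::121234567890:role/loki-distributed-bucket-role",
}

for problem in check_role_arns(arns):
    print(problem)
```

Replacing the placeholders with your own account ID in all three places should make the check return an empty list.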

Add the Loki Helm chart repository:

    helm repo add grafana https://grafana.github.io/helm-charts
    helm repo update

Deploy the Loki components:

The custom values file has been uploaded here for quick deployment.

    helm upgrade -i loki grafana/loki-distributed -n loki -f loki-distributed-values.yaml

Check the status of the corresponding pods after the installation is complete, for example with `kubectl get pods -n loki`.

The installed Loki components are gateway, ingester, distributor, querier, query-frontend, query-scheduler, compactor, index-gateway, memcached-chunks, memcached-frontend, memcached-index-queries and memcached-index-writes.
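With this many components, scanning the pod list by eye is error-prone. The sketch below shows one way to flag pods that are not fully ready, given pod data in the JSON shape that `kubectl get pods -o json` produces. The function name `not_ready_pods` and the pod names in the sample are made up for the example; the sample is hand-written, not output from a real cluster.

```python
import json


def not_ready_pods(pod_list):
    """Return names of pods that are not Running with every container ready."""
    failing = []
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        containers = status.get("containerStatuses", [])
        all_ready = bool(containers) and all(c.get("ready") for c in containers)
        if status.get("phase") != "Running" or not all_ready:
            failing.append(pod["metadata"]["name"])
    return failing


# Hand-written sample in the shape of `kubectl get pods -o json`.
sample = json.loads("""
{
  "items": [
    {"metadata": {"name": "loki-distributor-0"},
     "status": {"phase": "Running", "containerStatuses": [{"ready": true}]}},
    {"metadata": {"name": "loki-ingester-0"},
     "status": {"phase": "Running", "containerStatuses": [{"ready": false}]}},
    {"metadata": {"name": "loki-querier-0"},
     "status": {"phase": "Pending"}}
  ]
}
""")

print(not_ready_pods(sample))
```

Against a live cluster, the same function could be fed the parsed output of `kubectl get pods -n loki -o json` instead of the sample.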

NOTE:

In the second part of this blog, we will talk about how the CloudifyOps team solved the log aggregation challenge for a client using the Loki distributed setup.

CloudifyOps has worked with enterprises and start-ups across industries, successfully helping them manage their cloud infrastructure and optimization challenges. To know how we can help you tackle your cloud issues, write to us at sales@cloudifyops.com today.
